||

EU AI Act Compliance and Risk Management ReportBook for ML Deployments

||

A comprehensive PowerBI solution that transforms complex AI compliance data into actionable insights for ML deployments. The dashboard integrates multiple data sources tracking model performance, compliance scores, bias detection, regulatory deadlines, and user appeals. Features include real-time compliance monitoring, automated risk assessment, bias tracking, and performance metrics visualization. Essential for organizations deploying ML models under EU AI Act regulations, providing a unified view for compliance, risk management, and regulatory reporting.

SQL Server Power BI

The following guide provides step-by-step instructions for creating a studies management software in Excel with the input data visualized in Power BI. The presented data is exported from Excel and the visualizations in PowerBI will update as changes to cell data are made in Excel.

Some key features include:

◍ The ability to manage studies in a very granular and in-depth manner

◍ The ability to modify the platform to accommodate a wide variety of needs and situations

◍ Powerful visualization and insight capabilities

◍ Interactive and easily understandable user interface

Below is an example of the finished studies manager when loaded into PowerBI.

Try the different functions by interacting with the elements on the canvas. By pressing this icon ![]() you can go into full-screen mode. Switch between the different pages of the report here

you can go into full-screen mode. Switch between the different pages of the report here ![]()

Press ESC to return to this page.

Guide for Creating Project

Before diving into the technical implementation of the AI compliance monitoring system, it’s crucial to establish a solid foundation for the project. This phase focuses on defining the fundamental elements that will ensure our PowerBI ReportBook effectively tracks and manages AI compliance under the EU AI Act framework.

During this phase, we’ll outline specific compliance monitoring objectives, set up the development environment with the necessary tools, plan the data architecture, and identify data sources that will feed into the compliance tracking system. This sets the foundation for building a robust solution that can effectively monitor model performance, compliance scores, bias detection, regulatory deadlines, and other features.

In this project, our primary objective is to build a robust AI compliance monitoring system that aligns with the EU AI Act. Clearly defining our goals will ensure that the Power BI ReportBook matches our expectations.

Key Objectives for This Project

By setting these objectives, we establish a clear direction for building a scalable and efficient AI compliance monitoring system in Power BI.

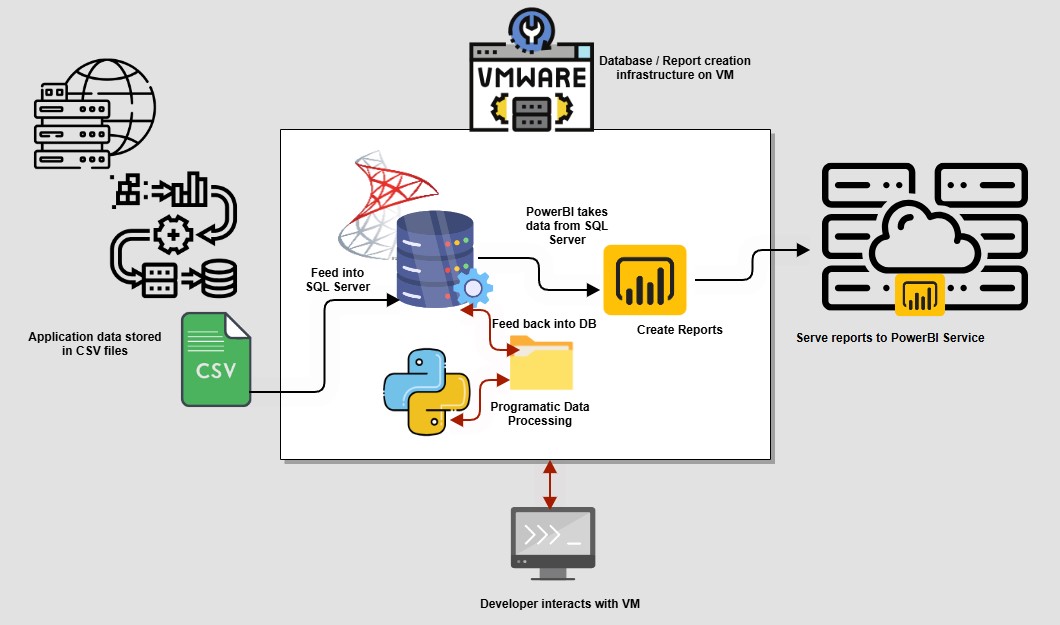

Since our project requires a controlled environment for data processing and compliance monitoring, we will use VMware to set up a Windows Server virtual machine (VM). This VM will host both SQL Server and Power BI Desktop, ensuring seamless integration and live query capabilities.

To make the project as easily accessible as possible, we will use only free versions of the different required software.

VMware Setup

Download VMware Workstation Player (free version):

Windows Server Setup

Download Windows Server 2022 Evaluation (free 180-day trial):

https://www.microsoft.com/en-us/evalcenter/evaluate-windows-server-2022

SQL Server Setup (On VM)

Download SQL Server Developer Edition (free for development):

https://www.microsoft.com/en-us/sql-server/sql-server-downloads

SQL Server Management Studio (SSMS) Setup

This ensures you have a fully featured interface for interacting with your SQL Server database – essential for the importing, querying, and administration steps in the next sections.

Power BI Desktop Setup (On VM)

Download Power BI Desktop (free version):

System Overview

The AI compliance monitoring system will be deployed on a Windows Server virtual machine (VM), hosting both SQL Server and Power BI Desktop. The architecture consists of the following components:

Data Flow Architecture

The system follows a structured data pipeline:

For this demonstration project, we’ll be using a set of prepared CSV files that simulate real-world AI compliance data. While in production these would come from various live systems, our demo files provide a comprehensive dataset for building our compliance monitoring solution. These datasets contain structured compliance-related information, allowing us to build and test the Power BI ReportBook without requiring direct integration with external systems.

Demo Dataset Files

We’ll be working with the following CSV files:

AI_Compliance_Dataset.csv

: Core compliance monitoring data

AI_GDPR_Regulatory_Provisions.csv

: GDPR-specific regulatory requirements

AI_Model_Interaction_Dataset.csv

: Records of AI model interactions and decisions

AI_Performance_Dataset.csv

: Model performance metrics and evaluations

AI_Regulatory_Provisions_Dataset.csv

: AI Act regulatory requirements

Compliance_Audit_Dataset.csv

: Audit logs and findings

Decision_Appeal_Dataset.csv

: Appeal records for AI decisions

System_Incident_Dataset.csv

: Incident reports and resolutions

User_Feedback_Dataset.csv

: User feedback and experience data

The files for the project can be downloaded from the buttons below:

Real-World Data Collection

In a production environment, this data would typically be gathered from various sources:

Automated Sources

Manual Input Sources

Integration Considerations

When implementing this system with live data sources, you would need to:

Data Preparation Steps

Before proceeding with the import, ensure:

With our CSV files prepared, we’ll move on to importing this data into SQL Server for processing and analysis. The variety of our datasets will allow us to create comprehensive compliance monitoring dashboards that cover all aspects of AI regulation requirements.

During this phase, we’ll focus on importing our collection of CSV files containing various aspects of AI compliance data – from model performance metrics to regulatory requirements and user feedback. We’ll implement data cleaning procedures, establish proper data structures, and ensure our datasets are optimized for analysis. This groundwork is essential for creating meaningful insights and maintaining accurate compliance tracking.

The quality of our data preparation directly impacts the effectiveness of our compliance monitoring, so we’ll pay careful attention to data validation, schema documentation, and maintaining referential integrity across our datasets. By following a systematic approach to data preparation, we ensure our PowerBI ReportBook will have a reliable foundation for generating compliance insights and risk assessments.

With our data files cleaned and verified, we’re ready to import them into SQL Server so they can be securely stored, queried, and integrated into our compliance monitoring system. In this step, we’ll create or designate a database to house our tables, then systematically load each CSV file using SQL Server Management Studio (SSMS). By doing so, we’ll be able to leverage SQL queries for data validation, transformation, and the creation of robust compliance dashboards in Power BI.

1. Create a Dedicated Database

Compliance_DB), then click OK to finalize.

2. Use the Import Data Wizard

AI_Compliance_Dataset.csv).AI_Compliance_Dataset), and ensure the column mappings look correct.Tip: If you see unexpected characters or alignment issues, check the CSV’s encoding (UTF-8) and delimiters, then re-import as needed.

3. Repeat for All CSV Files

Perform these import steps for each CSV in your dataset, ensuring each table is appropriately named and consistent with your naming conventions. Doing so will keep your compliance-related tables organized and easy to reference in subsequent phases.

4. Verify the Imported Data

By completing these steps, you’ll have all your CSV data stored in SQL Server, ready for the data modeling and transformation process ahead.

Now that our data is imported into SQL Server, we’ll execute a series of cleaning operations to ensure data quality and consistency across all our compliance-related tables. This step is crucial for reliable analysis and reporting in our PowerBI dashboards.

1. Standardize Text Fields

-- Remove leading/trailing spaces

UPDATE [TableName]

SET [ColumnName] = TRIM([ColumnName])

WHERE [ColumnName] LIKE ' %' OR [ColumnName] LIKE '% '

-- Standardize case for categorical fields

UPDATE [AI_Compliance_Dataset]

SET [ComplianceStatus] = UPPER([ComplianceStatus])

WHERE [ComplianceStatus] IS NOT NULL

2. Handle Missing Values

-- Identify columns with NULL values

SELECT

COLUMN_NAME,

COUNT(*) as NullCount

FROM [Compliance_DB].INFORMATION_SCHEMA.COLUMNS AS cols

LEFT JOIN [TableName] AS data

ON 1=1

WHERE data.[ColumnName] IS NULL

GROUP BY COLUMN_NAME

3. Fix Date Formats

-- Standardize date formats

UPDATE [TableName]

SET [DateColumn] = TRY_CONVERT(datetime2, [DateColumn])

WHERE ISDATE([DateColumn]) = 1

4. Remove Duplicate Records

-- Identify and remove duplicates based on key fields

WITH DuplicateCTE AS (

SELECT *,

ROW_NUMBER() OVER(PARTITION BY [KeyField1], [KeyField2]

ORDER BY [TimeStamp] DESC) as RowNum

FROM [TableName]

)

DELETE FROM DuplicateCTE WHERE RowNum > 1

These cleaning operations should be performed systematically on each imported table, adjusting the specific fields and conditions based on the table’s content and requirements. Document any significant data quality issues encountered and the corrections applied for future reference.

With our datasets imported and cleaned in SQL Server, we now document each table’s schema. This documentation provides a structured overview of the data, including column names, data types, relationships, and usage. Proper schema documentation ensures will help us in the next stage where we refine and re-model the schema to fit the database.

1. AI_Model_Dataset

idcompliance_id| Column Name | Data Type | Description |

|---|---|---|

| model_id | INT (PK) | Unique feedback entry ID |

| compliance_id | INT (FK) | compliance table reference |

| model_name | TEXT | Name of Model |

| model_type | TEXT | Type of model |

| creation_date | DATETIME | Date model was created |

2. AI_Compliance_Dataset

compliance_idmodel_id (linked to AI_Performance_Dataset)| Column Name | Data Type | Description |

|---|---|---|

| compliance_id | INT (PK) | Unique ID for each compliance entry |

| model_id | VARCHAR(50) (FK) | AI model identifier |

| compliance_status | VARCHAR(20) | Compliance result (e.g., "Compliant", "Non-Compliant") |

| regulatory_category | VARCHAR(50) | Relevant AI Act category (e.g., "High-Risk AI") |

| compliance_score | DECIMAL(5,2) | Compliance percentage (0-100) |

| last_audit_date | DATETIME | Date of last compliance audit |

| flagged_issues | TEXT | Summary of compliance concerns |

3. AI_GDPR_Regulatory_Provisions

gdpr_id| Column Name | Data Type | Description |

|---|---|---|

| gdpr_id | INT (PK) | Unique GDPR provision ID |

| provision_name | VARCHAR(255) | Name of the GDPR provision |

| requirement_text | TEXT | Full text of the requirement |

| risk_level | VARCHAR(20) | Associated risk (e.g., "High", "Medium") |

4. AI_Model_Interaction_Dataset

interaction_idmodel_id (linked to AI_Performance_Dataset)| Column Name | Data Type | Description |

|---|---|---|

| interaction_id | INT (PK) | Unique ID for each model interaction |

| model_id | VARCHAR(50) (FK) | AI model identifier |

| user_id | VARCHAR(50) | Identifier for the user interacting with the model |

| decision_output | VARCHAR(255) | Model’s decision output |

| timestamp | DATETIME | Date and time of the interaction |

5. AI_Performance_Dataset

performance_idmodel_id (linked to AI_Model_Interaction_Dataset)| Column Name | Data Type | Description |

|---|---|---|

| performance_id | INT (PK) | Unique ID for performance record |

| model_id | VARCHAR(50) (FK) | AI model identifier |

| accuracy_score | DECIMAL(5,2) | Model’s accuracy percentage |

| bias_score | DECIMAL(5,2) | Bias detection score |

| performance_date | DATETIME | Date of performance evaluation |

6. AI_Regulatory_Provisions_Dataset

regulation_id| Column Name | Data Type | Description |

|---|---|---|

| regulation_id | INT (PK) | Unique ID for regulation entry |

| category | VARCHAR(50) | AI risk category (e.g., "High-Risk AI") |

| requirement_text | TEXT | Description of the regulatory requirement |

| enforcement_date | DATETIME | Date the regulation takes effect |

7. Compliance_Audit_Dataset

audit_idcompliance_id (linked to AI_Compliance_Dataset)| Column Name | Data Type | Description |

|---|---|---|

| audit_id | INT (PK) | Unique audit entry ID |

| compliance_id | INT (FK) | Reference to compliance dataset |

| audit_result | VARCHAR(20) | Audit outcome ("Pass", "Fail") |

| audit_notes | TEXT | Summary of findings |

| audit_date | DATETIME | Date of audit |

8. Decision_Appeal_Dataset

appeal_idinteraction_id (linked to AI_Model_Interaction_Dataset)| Column Name | Data Type | Description |

|---|---|---|

| appeal_id | INT (PK) | Unique appeal record ID |

| interaction_id | INT (FK) | Reference to model interaction |

| appeal_reason | TEXT | User’s reason for appeal |

| resolution_status | VARCHAR(50) | Appeal resolution status ("Pending", "Approved", "Rejected") |

| resolution_date | DATETIME | Date of resolution (if applicable) |

9. System_Incident_Dataset

incident_idmodel_id (linked to AI_Performance_Dataset)| Column Name | Data Type | Description |

|---|---|---|

| incident_id | INT (PK) | Unique system incident ID |

| model_id | VARCHAR(50) (FK) | AI model associated with the incident |

| incident_type | VARCHAR(50) | Category of incident ("Bias Detected", "Performance Drop") |

| incident_description | TEXT | Description of the issue |

| resolution_status | VARCHAR(50) | Incident resolution status |

| incident_date | DATETIME | Date the incident was reported |

10. User_Feedback_Dataset

feedback_idmodel_id (linked to AI_Model_Interaction_Dataset)| Column Name | Data Type | Description |

|---|---|---|

| feedback_id | INT (PK) | Unique feedback entry ID |

| model_id | VARCHAR(50) (FK) | AI model referenced |

| user_id | VARCHAR(50) | Identifier for feedback provider |

| feedback_text | TEXT | User’s feedback message |

| feedback_date | DATETIME | Date feedback was submitted |

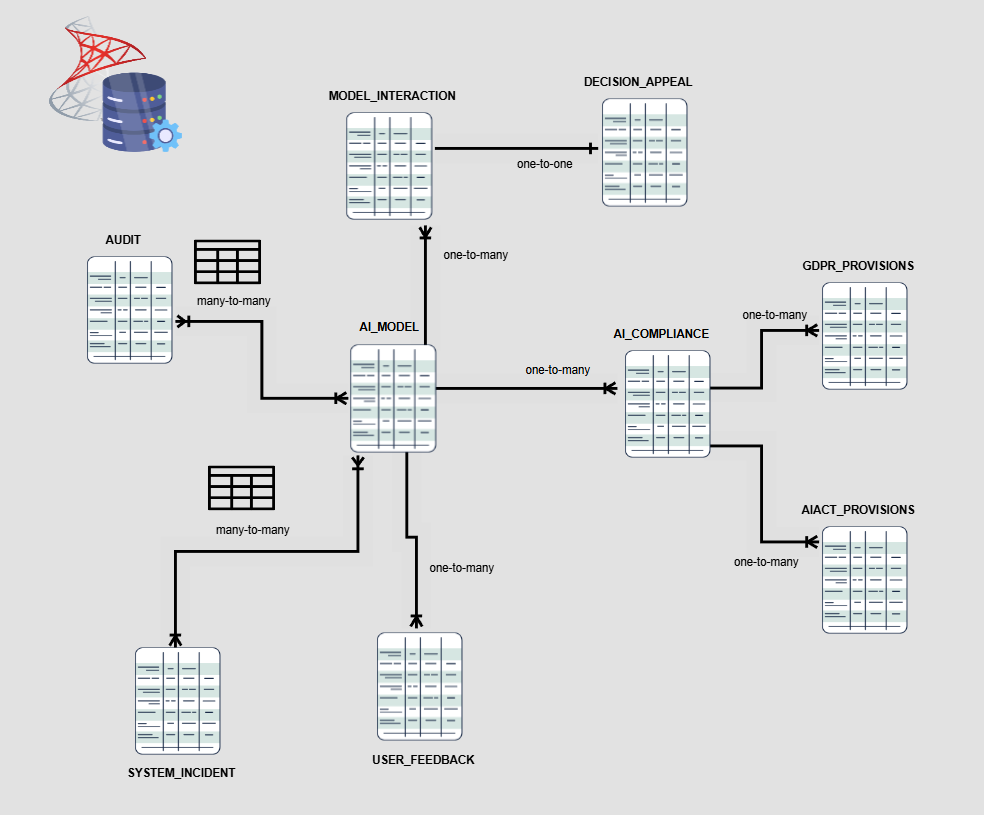

Now that we have our data imported and cleaned, we’ll focus on structuring it optimally for compliance monitoring and analysis. This phase involves creating relationships between our various compliance datasets, transforming data where needed, and establishing a robust schema that supports efficient querying and visualization.

During this phase, we’ll organize our compliance data using established dimensional modeling principles, ensuring that metrics, risk assessments, and regulatory requirements are properly linked. We’ll create appropriate relationships between fact tables (like model interactions and compliance events) and dimension tables (such as regulatory provisions and model metadata), enabling powerful cross-table analysis and comprehensive compliance tracking.

The transformations we implement will focus on:

Establishing relationship keys is essential for creating a structured and optimized data model that allows seamless integration between datasets, ensuring accurate compliance tracking, bias analysis, and risk assessment. In this section, we define the primary keys (PKs) and foreign keys (FKs) needed to connect different tables while maintaining data integrity.

To create an effective data model, we need to identify unique keys in each dataset that establish relationships between compliance data, AI performance, regulatory provisions, and user interactions.

NOTE TO SELF: we have renamed tables (see capitalized in tables)

NOTE TO SELF: we transform model_id etc in tables to be id etc … then on t-sql procedure with ingestion we transform … bulk insert

NOTE TO SELF: The need for one-to-many tables f.ex. in

GDPR_PROVISIONS id → aiact_provision_id AI_COMPLIANCE

AIACT_PROVISIONS id → gdpr_provision_id AI_COMPLIANCE

The following Tables Summarize these relationships for our data.

Primary / Foreign Key Overview

| Dataset | Primary Key (PK) | Foreign Keys (FKs) (Links to other datasets) |

|---|---|---|

| GDPR_PROVISIONS | Provision ID | None (lookup table) |

| MODEL_INTERACTION | Interaction ID | AI Model → AI models in other datasets |

| PERFORMANCE | Performance ID | AI Model ID |

| AIA_PROVISIONS | Provision ID | None (lookup table) |

| AUDIT | Audit ID | AI Model ID → AI models in other datasets |

| DECISION_APPEAL | Appeal ID | AI Model ID, User ID |

| AI_COMPLIANCE | AI Model ID | Regulatory Framework, Compliance Status |

| SYSTEM_INCIDENT | Incident ID | AI Model ID |

| USER_FEEDBACK | Feedback ID | AI Model ID, User ID |

Relationship Mapping Table

| Source Table | Relationship | Destination Table |

|---|---|---|

| AI_MODEL | id → model_id | MODEL_INTERACTION |

| AI_MODEL | id → model_id | AUDIT |

| AI_MODEL | id → model_id | SYSTEM_INCIDENT |

| AI_MODEL | id → model_id | USER_FEEDBACK |

| AI_COMPLIANCE | id → compliance_id | AI_MODEL |

| GDPR_PROVISIONS | id → aiact_provision_id | AI_COMPLIANCE |

| AIACT_PROVISIONS | id → gdpr_provision_id | AI_COMPLIANCE |

| MODEL_INTERACTION | id → interaction_id | DECISION_APPEAL |

Relationship Mapping Chart

organization ID, model ID, date

snowflake schema, data vault v2

fuzzy matching, bridging tables

fact tables, dimension tables, or separate tables

organization ID, model ID, date

organization ID, model ID, date

direct query or import mode

direct query or import mode

This guide provides step-by-step instructions for creating a PowerBI studies management visualization (viz). The viz will automatically update itself if the data inside any of the excel columns are changed.

Here is also a Youtube video that goes through the same step-by-step guide that is presented in written form here.

Under development / More coming soon

direct query or import mode

compliance trends, risk heatmaps, bias metrics, user appeals

manual or scheduled updates

date ranges, model selection, compliance tiers

This guide provides step-by-step instructions for creating a PowerBI studies management visualization (viz). The viz will automatically update itself if the data inside any of the excel columns are changed.

Here is also a Youtube video that goes through the same step-by-step guide that is presented in written form here.

Under development / More coming soon

leveraging data from “risk_assessment.csv”

group fairness metrics, user feedback

incident logs, resolution times

integrated visuals

This guide provides step-by-step instructions for creating a PowerBI studies management visualization (viz). The viz will automatically update itself if the data inside any of the excel columns are changed.

Here is also a Youtube video that goes through the same step-by-step guide that is presented in written form here.

Under development / More coming soon

integrated visuals

(if on-prem SQL) and refresh schedules

compliance drops, risk surges

compliance drops, risk surges

This guide provides step-by-step instructions for creating a PowerBI studies management visualization (viz). The viz will automatically update itself if the data inside any of the excel columns are changed.

Here is also a Youtube video that goes through the same step-by-step guide that is presented in written form here.

Under development / More coming soon

GitHub Actions, Azure DevOps pipelines

Power BI usage metrics, SQL Server logs

additional data sources, advanced analytics

amendments, new regulations